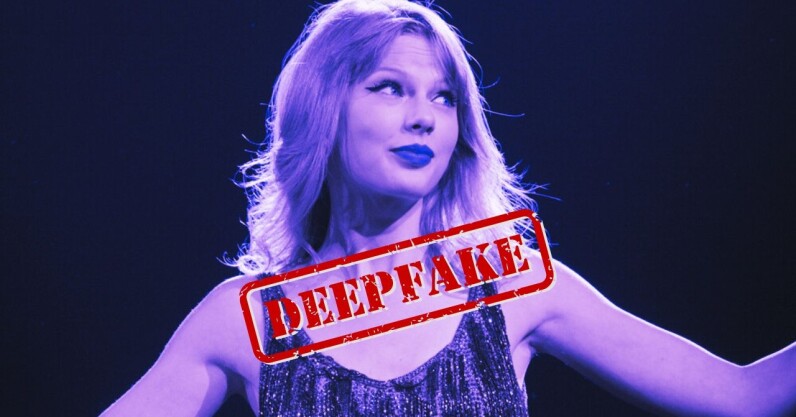

Recently, explicit, non-consensual deepfake images of Taylor Swift flooded X, formerly Twitter. One of them gained 47 million views before it was removed 17 hours later.

In an effort to stop the circulation of the images, X blocked searches like “Taylor Swift” or “Taylor Swift AI”. However, simply rearranging the search from “Taylor Swift AI” to “Taylor AI Swift” still yielded results.
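X has not published how its search block worked, so the following is only a hypothetical sketch of why a block on exact phrases can be sidestepped by reordering words, and how an order-insensitive check behaves differently. The blocklist and both function names are assumptions made for illustration.

```python
import re

# Hypothetical blocklist built from the two queries named above.
BLOCKED_PHRASES = ["taylor swift", "taylor swift ai"]


def tokens(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def blocked_by_exact_phrase(query: str) -> bool:
    """Block only when the normalised query equals a blocked phrase."""
    normalised = " ".join(tokens(query))
    return normalised in BLOCKED_PHRASES


def blocked_by_token_set(query: str) -> bool:
    """Block when every word of some blocked phrase appears in the query, in any order."""
    words = set(tokens(query))
    return any(set(tokens(phrase)) <= words for phrase in BLOCKED_PHRASES)
```

Under these assumptions, the exact-phrase check lets “Taylor AI Swift” through, while the word-set check catches it without blocking an unrelated query such as “Swift programming”.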

The social media platform has come under fire for its slow response, which many blame on Elon Musk, who has cut 80% of the company’s content moderation team since taking over in 2022.

The deluge has sparked outrage from fans and politicians, who are rallying for stricter laws to prevent the creation and spread of non-consensual AI-generated porn and empower victims of these attacks to seek justice.

The US introduced a bill on Tuesday that would criminalise the spread of non-consensual, sexualised images generated by AI. In the UK, the sharing of deepfake porn became illegal as part of the Online Safety Act in 2023.

In the EU, despite a number of incoming regulations targeting AI and social media, there are no specific laws protecting victims of non-consensual deepfake porn.

“Not enough is being done to crack down on the spread of harmful misinformation like deepfakes,” Marcel Wendt, CTO and founder of Dutch online identity verification company Digidentity, told TNW. “High-profile cases like these should serve as a wake-up call to lawmakers: we need to make people more secure online.”

What’s the issue with deepfakes?

Deepfakes are fake images or videos generated by deep learning AI algorithms (hence the name). While some are harmless, the vast majority are decidedly not.

Deepfake porn makes up 98% of all deepfake videos online. The majority of these depict female celebrities whose images have been turned into porn without their consent, according to the State of Deepfakes report published last year.

Many of the tools for creating deepfake porn are free and easy to use, which has fuelled a 550% increase in the volume of deepfakes online from 2019 to 2023. Sometimes perpetrators share these deepfakes for purely lewd purposes, while other times the intent is to harass, extort, offend, malign, or humiliate the people depicted.

While the first wave of deepfake porn targeted high-profile women, nowadays Swift’s fans are as likely to be targeted as she is. Schools across the world are grappling with the rise of AI nudes of, and sometimes created by, children.

Are these deepfakes illegal?

Last year, more than 20 teenage girls in Spain received AI-generated naked images of themselves, made from photos taken from their Instagram accounts in which they were fully clothed. While circulating pornographic content involving minors is illegal, placing the face of a minor into a pornographic image or video made by consenting adults is a legal grey area.

“Since it is generated by deepfake, the actual privacy of the person in question is not affected in the eyes of the law,” Manuel Cancio, professor of criminal law at the Autonomous University of Madrid, told Euronews following the case in Spain.

The only law in the EU directly addressing the problem is the Dutch Criminal Code. A provision in the law covers both real and non-real child pornography. This provision is the exception rather than the rule.

What about the suite of new EU laws designed to tackle everything from misinformation to AI abuse online?

According to the Centre for Data Innovation, while the Digital Services Act, for example, requires social media platforms to better flag and remove illegal content, it fails to classify non-consensual deepfakes as illegal.

The EU also controversially dropped a proposal in the DSA during last-minute negotiations which would have required porn sites hosting user-generated content to swiftly remove material flagged by victims as depicting them without consent.

Other laws, such as the upcoming AI Act, require creators to disclose deepfake content. But whether people know a deepfake is one or not is not exactly the point; the image itself is what does the damage.

“The effect it has (on the victim) can be very similar to a real nude picture, but the law is one step behind,” Cancio said.

Holding tech platforms to account

Manipulating images to deceive people is nothing new. Even the ancient Egyptian pharaoh Hatshepsut portrayed herself in statues and paintings as a man in order to win favour with her subjects.

But the explosion of AI tools that create images and videos at the touch of a button over the past few years is changing the game, and they are being used for far more sinister purposes than fighting patriarchal prejudices.

Just take the ClothOff app. It lets users strip the clothes from anyone who appears in their phone’s picture gallery. It costs €10 to create 25 naked images, and is believed to be the tool used in the Spanish deepfake scandal mentioned earlier.

The software used for the Taylor Swift images was likely Microsoft Designer. Through a loophole the tech giant has since closed, users could generate images of celebrities on the platform by writing prompts like “taylor ‘singer’ swift” or “jennifer ‘actor’ aniston.”

The most popular generative AI tools have guardrails in place to prevent the creation of harmful deepfakes, although users are constantly finding new ways to trick them. Perpetrators also use lesser-known, open-source generative AI tools that are harder to regulate.

“While it’s difficult to prevent the creation of deepfakes, it is a lot easier to prevent their spread,” said Wendt from Digidentity. “Social media platforms like X need to do a lot more to flag and remove harmful content before it spreads.”

Wendt advocates for a digital identifier for all social media accounts, so that when you create an account on platforms like X, Facebook, or Instagram it can be linked to your government-issued ID. Given big tech’s track record on trust and safety though, requiring users to sign in with their real ID seems like a pipe dream at present.

What we can do now

While this all might seem like doom and gloom, there are glimmers of hope.

One thing the DSA will do is put pressure on big tech to improve online safety on their platforms. If they don’t, they could face a fine of up to 6% of their global revenue or be banned from the bloc entirely.

And while not perfect, the incoming AI Act sets a global precedent in which digital ethics and safety are paramount, and provides a legal springboard for future measures to counter the ever-evolving world of deepfakes.

In the UK, the Online Safety Act provides new powers to take action where deepfake porn is concerned, and the offence carries a maximum two-year prison sentence. There are also criminal offences for platforms that host such content.

Technology will also play a key role in tackling and tracing deepfakes, through better authentication systems, the implementation of digital watermarking, and blockchain. These technologies will help certify content authenticity and ensure a secure record of digital transactions, benefits that will make all of us more secure online.

“What we need is a comprehensive, multi-dimensional global collaboration strategy emphasising regulation, technology, and security,” Mark Minevich, author of Our Planet Powered by AI and a UN advisor on AI technology, told TNW.

“This will not only confront the immediate challenges of non-consensual deepfakes but also set a foundation for a digital environment characterised by trust, transparency, and enduring security,” he said.