Diffusion models produce high-quality images but require many forward passes. MIT researchers have developed a one-step AI image generator that collapses the multiple steps of conventional diffusion models into a single step.

Working in a single step, it generates images 30 times faster. It does this by training a new computer model to mimic the behavior of more complex, original image-generating models, an example of a teacher-student setup. The technique, called distribution matching distillation (DMD), produces images substantially faster while preserving their quality.

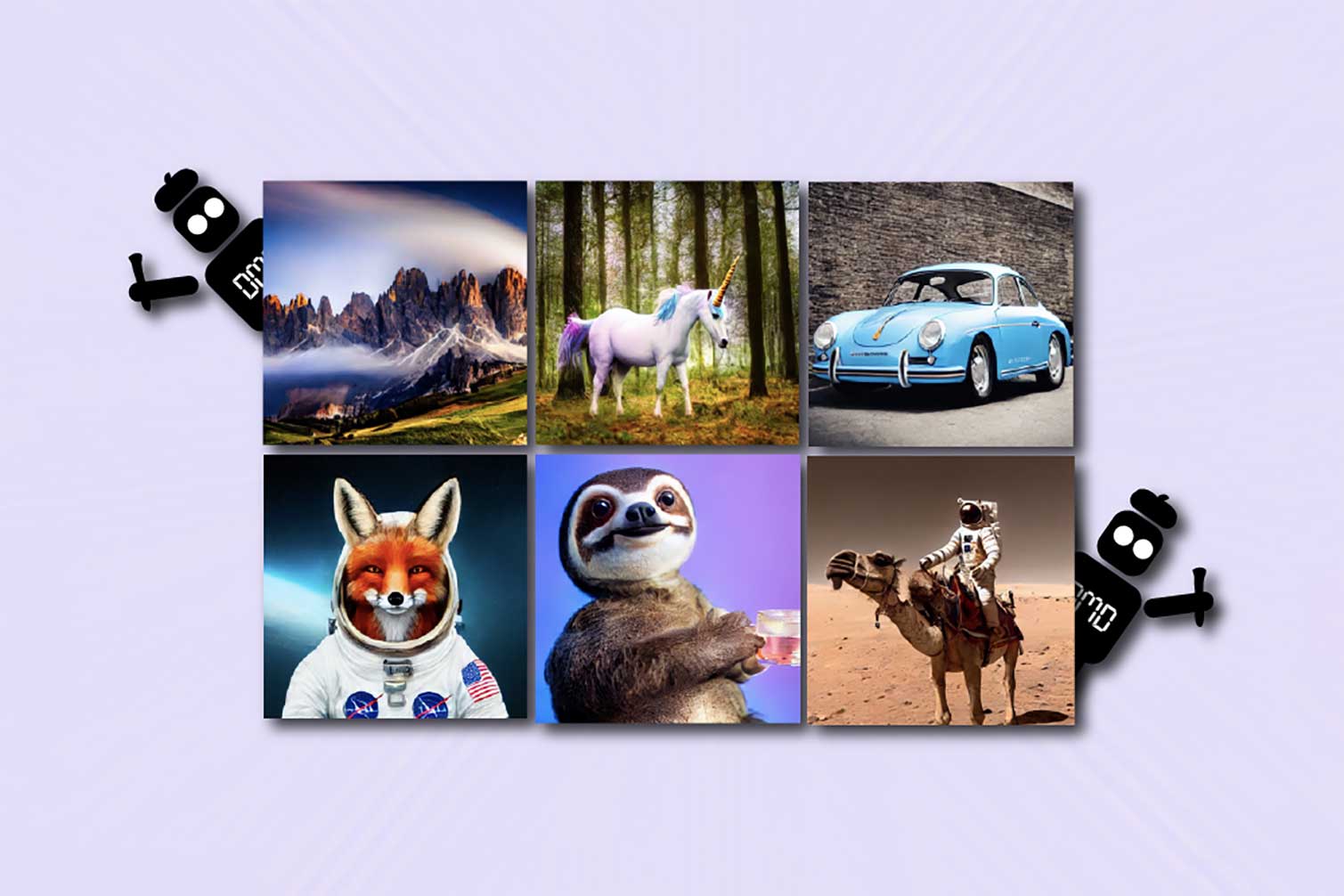

This work presents a novel approach that accelerates existing diffusion models such as Stable Diffusion and DALL·E 3 by 30 times. It reduces computing time while maintaining, if not surpassing, the quality of the generated visual content.

The approach combines the principles of diffusion models and generative adversarial networks (GANs) to generate visual content in a single step, rather than the hundred stages of iterative refinement that diffusion models currently require. It could become a new generative modeling method that offers exceptional speed and quality.
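The cost difference comes down to how many times the network must be evaluated per image. The toy below counts forward passes for a 100-step iterative sampler versus a one-step generator. It is only an illustration: `CountingNet`, `iterative_sample`, `one_step_sample`, and the placeholder "network" function are hypothetical stand-ins, not real models or a real library API, and the step count simply mirrors the "hundred stages" figure mentioned above.

```python
# Toy comparison of forward passes: iterative diffusion sampling versus a
# one-step generator. All names here are hypothetical illustrations.


class CountingNet:
    """Wraps a function and counts how many times it is called."""

    def __init__(self, fn):
        self.fn = fn
        self.calls = 0

    def __call__(self, x, t):
        self.calls += 1
        return self.fn(x, t)


def iterative_sample(denoiser, x, num_steps):
    # Each refinement step costs one forward pass of the denoiser.
    for i in range(num_steps, 0, -1):
        t = i / num_steps
        x = denoiser(x, t)
    return x


def one_step_sample(generator, z):
    # A distilled generator maps noise to an output in a single pass.
    return generator(z, 1.0)


toy_fn = lambda x, t: 0.9 * x  # hypothetical placeholder "network"
teacher = CountingNet(toy_fn)
student = CountingNet(toy_fn)

iterative_sample(teacher, 1.0, num_steps=100)
one_step_sample(student, 1.0)
print(teacher.calls, student.calls)  # 100 1
```

Forward-pass count is only a proxy for wall-clock time, which also depends on the architecture, image resolution, and hardware.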

The DMD approach has two components:

- Regression loss: anchors the mapping to ensure a coarse organization of the space of images, which makes training more stable.

- Distribution matching loss: ensures that the probability of the student model generating a given image corresponds to how frequently that image occurs in the real world.
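The two components can be written as one training objective. The block below is a sketch based on our reading of the DMD paper, not a verbatim transcription; the notation is illustrative: $G_\theta$ is the one-step generator, $s_{\text{real}}$ and $s_{\text{fake}}$ are score estimates from the two diffusion models, $y$ is the image the teacher produces from the same noise $z$ with its multi-step sampler, $\ell$ is a distance between images, $\lambda_{\text{reg}}$ is a weighting constant, and $w_t$ is a per-noise-level weighting.

```latex
\begin{aligned}
\mathcal{L}(\theta) &= D_{\mathrm{KL}}\!\left(p_{\text{fake}} \,\|\, p_{\text{real}}\right)
  + \lambda_{\text{reg}}\,\mathcal{L}_{\text{reg}}(\theta),
\qquad
\mathcal{L}_{\text{reg}}(\theta) = \mathbb{E}_{(z,\,y)}\!\left[\ell\big(G_\theta(z),\, y\big)\right] \\[4pt]
\nabla_\theta D_{\mathrm{KL}} &\approx \mathbb{E}_{z,\,t,\,\epsilon}\!\left[
  w_t\,\alpha_t\,\big(s_{\text{fake}}(x_t, t) - s_{\text{real}}(x_t, t)\big)\,
  \frac{\partial G_\theta(z)}{\partial \theta}\right],
\qquad
x_t = \alpha_t\,G_\theta(z) + \sigma_t\,\epsilon
\end{aligned}
```

The useful property is that the KL gradient needs only the difference of two scores, which diffusion models estimate, rather than the densities themselves. To our understanding, the paper uses LPIPS as $\ell$; treat that and the exact form of $w_t$ as details to check against the paper.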

To achieve this, two diffusion models serve as guides. They let the system distinguish generated images from real ones and use that signal to train the fast one-step generator.

The system achieves faster generation by training a new network to minimize the distribution divergence between its generated images and those from the training dataset used by traditional diffusion models.

Tianwei Yin, an MIT PhD student in electrical engineering and computer science, CSAIL affiliate, and the lead researcher on the DMD framework, said, “Our key insight is to approximate gradients that guide the improvement of the new model using two diffusion models. In this way, we distill the knowledge of the original, more complex model into the simpler, faster one, while bypassing the notorious instability and mode collapse issues in GANs.”
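The two-model idea can be illustrated in one dimension. This is a toy under strong assumptions: the "real" data is a Gaussian N(3.0, 0.5²), the one-step generator is the linear map G(z) = a·z + b, and both scores are computed in closed form instead of by trained diffusion models. In DMD, by our reading, the "fake" score comes from a second diffusion model that is continually trained on the generator's outputs. The noise schedule (α_t = 1, σ drawn uniformly from [0.1, 1]), learning rate, batch size, and the helper names `gaussian_score` and `dmd_step` are arbitrary, hypothetical choices, and the weighting w_t is omitted.

```python
import random

# Toy 1-D distribution-matching gradient. Assumptions: Gaussian data,
# a linear generator, closed-form scores, alpha_t = 1, no time weighting.
MU_REAL, STD_REAL = 3.0, 0.5  # hypothetical "real" data distribution


def gaussian_score(x, mean, var):
    """Score (gradient of the log-density) of N(mean, var) at x."""
    return -(x - mean) / var


def dmd_step(a, b, rng, lr=0.05, batch=128):
    """One gradient step on the generator G(z) = a * z + b."""
    grad_a = grad_b = 0.0
    for _ in range(batch):
        z = rng.gauss(0.0, 1.0)
        eps = rng.gauss(0.0, 1.0)
        sigma = rng.uniform(0.1, 1.0)  # random noise level per sample
        x = a * z + b                  # one-step generator output
        x_t = x + sigma * eps          # noised sample
        # "Real" score: noised data distribution N(MU_REAL, STD_REAL^2 + sigma^2).
        s_real = gaussian_score(x_t, MU_REAL, STD_REAL**2 + sigma**2)
        # "Fake" score: noised generator distribution N(b, a^2 + sigma^2),
        # treated as a constant (no gradient flows through it).
        s_fake = gaussian_score(x_t, b, a**2 + sigma**2)
        g = s_fake - s_real            # per-sample gradient w.r.t. x
        grad_a += g * z                # chain rule: dx/da = z
        grad_b += g                    # chain rule: dx/db = 1
    a -= lr * grad_a / batch
    b -= lr * grad_b / batch
    return a, b


rng = random.Random(0)
a, b = 1.0, 0.0  # generator starts away from the data distribution
for _ in range(1000):
    a, b = dmd_step(a, b, rng)
print(f"a = {a:.3f}, b = {b:.3f}")  # should approach a = 0.5, b = 3.0
```

When the generator's distribution matches the data, the two scores coincide at every point and the gradient vanishes; no discriminator is involved in this toy.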

The pre-train networks were utilized for the brand-new trainee design, streamlining the whole procedure. Researchers then copied and fine-tuned the specifications from the initial designs to accomplish quick training merging of the most recent design. Their brand-new design can produce premium images with the exact same architectural structure.

DMD showed consistent performance when the researchers tested it against common methods.

On the popular benchmark of generating images from specific ImageNet classes, DMD is the first one-step diffusion technique to produce images on par with those from the original, more complex models. Its Fréchet Inception Distance (FID) score comes within about 0.3 of theirs, which is notable because FID evaluates both the quality and the diversity of generated images.
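FID fits a Gaussian to feature statistics of real images and another to those of generated images, then measures the distance between the two fits: lower is better, and 0 means the fits are identical. The standard metric uses 2048-dimensional Inception-v3 features and full covariance matrices. The sketch below assumes diagonal covariances so the matrix square root becomes elementwise; that keeps it dependency-free but makes it a simplification, not the standard implementation. The function name `fid_diagonal` is hypothetical.

```python
import math


def fid_diagonal(mu1, var1, mu2, var2):
    """FID between two Gaussians with diagonal covariances.

    General form: ||mu1 - mu2||^2 + Tr(S1 + S2 - 2 (S1 S2)^(1/2)).
    With diagonal S1 and S2 the square root is elementwise, so each
    dimension contributes (m1 - m2)^2 + v1 + v2 - 2 * sqrt(v1 * v2).
    """
    total = 0.0
    for m1, v1, m2, v2 in zip(mu1, var1, mu2, var2):
        total += (m1 - m2) ** 2 + v1 + v2 - 2.0 * math.sqrt(v1 * v2)
    return total


same = fid_diagonal([0.0, 1.0], [1.0, 4.0], [0.0, 1.0], [1.0, 4.0])
shifted = fid_diagonal([0.0, 0.0], [1.0, 1.0], [0.3, 0.4], [4.0, 1.0])
print(same)               # 0.0
print(round(shifted, 6))  # 1.25
```

The mean term captures shifts in content, while the covariance term penalizes a generator whose outputs are too uniform or too spread out, which is why FID is read as reflecting both quality and diversity.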

DMD also excels in:

- Industrial-scale text-to-image generation.

- State-of-the-art one-step generation performance.

There is an inherent link between the quality of DMD-generated images and the capabilities of the teacher model used during distillation. In its current form, which uses Stable Diffusion v1.5 as the teacher model, the student inherits the teacher's limitations, such as difficulty rendering small faces and detailed text. This suggests that more advanced teacher models could improve DMD-generated images even further.

Fredo Durand, MIT professor of electrical engineering and computer science and CSAIL principal investigator, said, “Decreasing the number of iterations has been the Holy Grail in diffusion models since their inception.”

“We are very excited to finally enable single-step image generation, which will dramatically reduce compute costs and accelerate the process.”

Alexei Efros, a professor of electrical engineering and computer science at the University of California at Berkeley who was not involved in this research, said, “Finally, a paper that successfully combines the versatility and high visual quality of diffusion models with the real-time performance of GANs. I expect this work to open up fantastic possibilities for high-quality real-time visual editing.”

Journal Reference:

- Tianwei Yin, Michaël Gharbi, Richard Zhang, Eli Shechtman, Fredo Durand, William T. Freeman, Taesung Park. One-step Diffusion with Distribution Matching Distillation. DOI: 10.48550/arXiv.2311.18828