Nvidia is the Taylor Swift of tech companies.

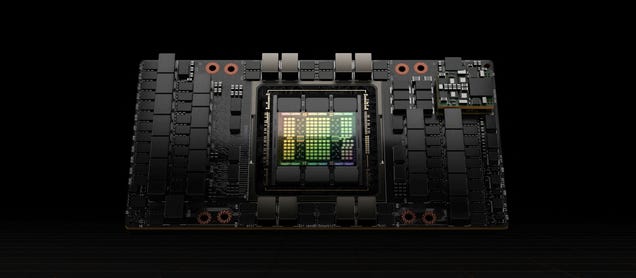

Its AI customers pay $40,000 for advanced chips and often wait months for the company’s tech, staying loyal even as competing options launch. That devotion comes from Nvidia being the biggest AI chipmaker game in town. But there are also significant technical reasons that keep users coming back.

It’s not simple to swap out one chip for another: companies build out their AI products to the specifications of those chips. Switching to a different option could mean going back and reconfiguring AI models, a time-consuming and expensive pursuit. It’s also hard to mix and match different types of chips. And it’s not just the hardware: CUDA, Nvidia’s software for controlling the AI chips known as GPUs, works really well, said Ray Wang, CEO of Silicon Valley-based Constellation Research. Wang said that helps reinforce Nvidia’s market dominance.

“It’s not like there’s a lock-in,” he said. “It’s just that nobody’s really spent some time to say, ‘Hey, let’s go build something better.’”

That could be changing. Over the last two weeks, tech companies have started coming for Nvidia’s lunch, with Facebook parent Meta, Google parent Alphabet, and AMD all revealing new or updated chips. Others, including Microsoft and Amazon, have also made recent announcements about homegrown chip products.

While Nvidia is unlikely to be unseated anytime soon, these efforts and others could threaten the company’s estimated 80% market share by picking at some of the chipmaker’s weaknesses, taking advantage of a changing ecosystem, or both.

Different chips are better at different AI tasks, but switching between the various options is a headache for developers. It can even be difficult across different models of the same chip, Wang said. Building software that can play nice between a variety of chips creates new opportunities for competitors, with Wang pointing to oneAPI as a startup already working on a product like that.

“People are gonna figure out sometimes they need CPUs, sometimes they need GPUs, and sometimes they need TPUs, and you’re gonna get systems that actually walk you through all three of those,” Wang said, referring to central processing units, graphics processing units, and tensor processing units, three different kinds of AI chips.

In 2011, venture capitalist Marc Andreessen famously proclaimed that software was eating the world. That still holds true in 2024 when it comes to AI chips, which are increasingly driven by software innovation. The AI chip market is undergoing a familiar shift with echoes in telecom, in which business customers moved from relying on multiple hardware components to integrated software solutions, said Jonathan Rosenfeld, who leads the FundamentalAI group at MIT FutureTech.

“If you look at the actual advances in hardware, they’re not from Moore’s Law or anything like that, not even remotely,” said Rosenfeld, who is also co-founder and CTO of the AI healthcare startup somite.ai.

This evolution points toward a future in which software plays a critical role in optimizing across different hardware platforms, reducing dependence on any single provider. While Nvidia’s CUDA has been a powerful tool at the single-chip level, a shift to the software-dependent landscape required by large models that span many GPUs won’t necessarily benefit the company.

“We’re likely going to see consolidation,” Rosenfeld said. “There are many entrants and a lot of money and definitely a lot of optimization that can happen.”

Rosenfeld doesn’t see a future without Nvidia as a major force in training AI models like ChatGPT. Training is how AI models learn how to do tasks, while inference is when they use that knowledge to perform actions, such as responding to questions users ask a chatbot. The computing needs for those two steps are distinct, and while Nvidia is well suited to the training part of the equation, the company’s GPUs aren’t quite as well set up for inference.

Despite that, inference accounted for an estimated 40% of the company’s data-center revenue over the past year, Nvidia said in its latest earnings report.

“Frankly, they’re better at training,” said Jonathan Ross, the CEO and founder of Groq, an AI chip startup. “You can’t build something that’s better at both.”

Training is where you spend money, and inference is supposed to be where you make money, Ross said. But companies can be surprised when an AI model is put into production and takes more computing power than expected, eating into profits.

Plus, GPUs, the main chip Nvidia makes, aren’t particularly fast at spitting out answers for chatbots. While developers won’t notice a little lag or delay during a month-long training run, people using chatbots want responses as fast as possible.

Ross, who previously worked on chips at Google, started Groq to build chips called Language Processing Units (LPUs), which are built specifically for inference. A third-party test from Artificial Analysis found that ChatGPT could run more than 13 times faster if it was using Groq’s chips.

Ross doesn’t see Nvidia as a competitor, although he jokes that customers often find themselves moved up in the queue to get Nvidia chips after buying from Groq. He sees them more as a colleague in the space, doing the training while Groq does the inference. In fact, Ross said Groq could help Nvidia sell more chips.

“The more people finally start making money on inference,” he said, “the more they’re going to spend on training.”