Adventures in 21st-century regulation

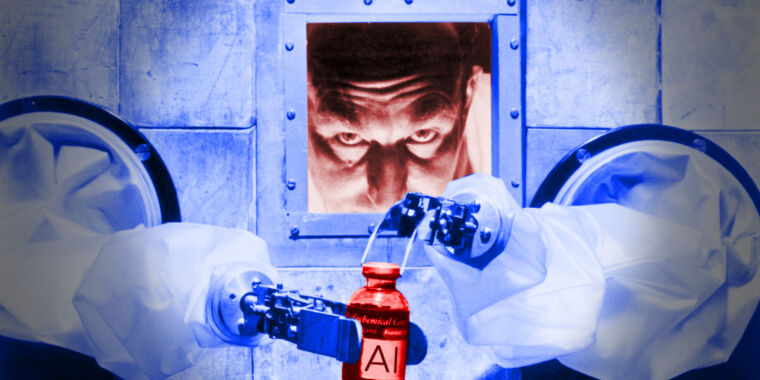

CEO-heavy board to tackle elusive AI safety concept and apply it to US infrastructure.


On Friday, the US Department of Homeland Security announced the formation of an Artificial Intelligence Safety and Security Board that consists of 22 members drawn from the tech industry, government, academia, and civil liberties organizations. Given the nebulous nature of the term “AI,” which can apply to a broad spectrum of computer technology, it’s unclear whether this group will even be able to agree on exactly what they are safeguarding us from.

President Biden directed DHS Secretary Alejandro Mayorkas to establish the board, which will meet for the first time in early May and subsequently on a quarterly basis.

The fundamental assumption posed by the board’s existence, and reflected in Biden’s AI executive order from October, is that AI is an inherently risky technology and that American citizens and businesses need to be protected from its misuse. Along those lines, the goal of the group is to help guard against foreign adversaries using AI to disrupt US infrastructure; develop recommendations to ensure the safe adoption of AI tech into transportation, energy, and Internet services; foster cross-sector collaboration between government and businesses; and create a forum where AI leaders can share information on AI security risks with the DHS.

It’s worth noting that the ill-defined nature of the term “Artificial Intelligence” does the new board no favors regarding scope and focus. AI can mean many different things: It can power a chatbot, fly an airplane, control the ghosts in Pac-Man, regulate the temperature of a nuclear power plant, or play a great game of chess. It can be all those things and more, and since many of those applications of AI work very differently, there’s no guarantee any two people on the board will be thinking about the same type of AI.

This confusion is reflected in the quotes provided by the DHS press release from new board members, some of whom are already talking about different types of AI. While OpenAI, Microsoft, and Anthropic are monetizing generative AI systems like ChatGPT based on large language models (LLMs), Ed Bastian, the CEO of Delta Air Lines, refers to entirely different classes of AI when he says, “By driving innovative tools like crew resourcing and turbulence prediction, AI is already making significant contributions to the reliability of our nation’s air travel system.”

Defining the scope of what AI exactly means, and which applications of AI are new or dangerous, might be one of the key challenges for the new board.

A roundtable of Big Tech CEOs attracts criticism

For the inaugural meeting of the AI Safety and Security Board, the DHS selected a tech industry-heavy group, populated with CEOs of four major AI vendors (Sam Altman of OpenAI, Satya Nadella of Microsoft, Sundar Pichai of Alphabet, and Dario Amodei of Anthropic), CEO Jensen Huang of top AI chipmaker Nvidia, and representatives from other major tech companies like IBM, Adobe, Amazon, Cisco, and AMD. There are also representatives from big aerospace and aviation: Northrop Grumman and Delta Air Lines.

Upon reading the announcement, some critics took issue with the board composition. On LinkedIn, Timnit Gebru, founder of The Distributed AI Research Institute (DAIR), especially criticized OpenAI’s presence on the board and wrote, “I’ve now seen the full list and it is hilarious. Foxes guarding the hen house is an understatement.”