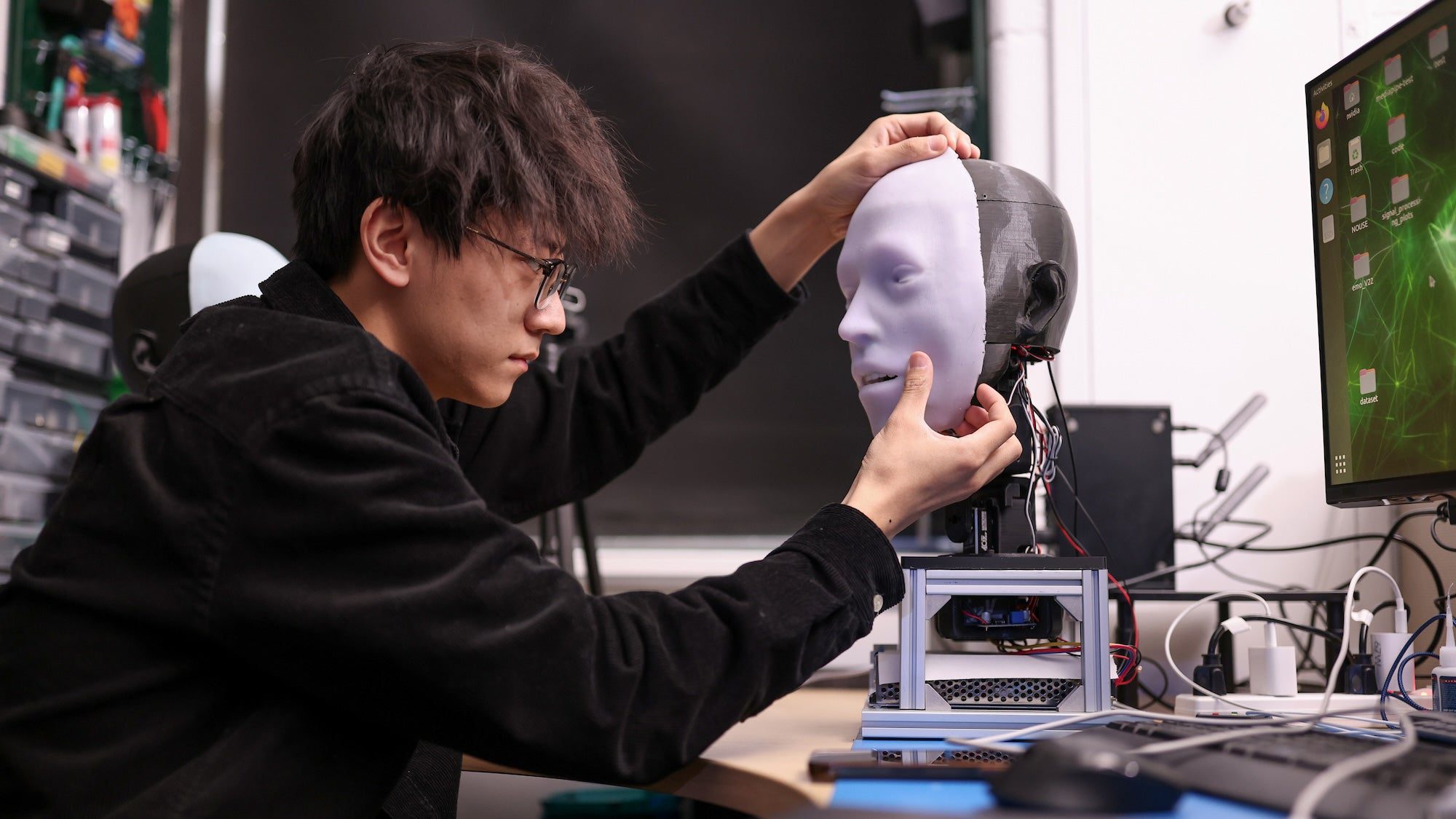

The robot’s head and face are designed to simulate facial interactions in conversation with humans.

Andrew Paul | Published Mar 29, 2024 10:00 AM EDT

If you want your humanoid robot to realistically mimic facial expressions, it’s all about timing. And for the past five years, engineers at Columbia University’s Creative Machines Lab have been honing their robot’s reflexes down to the millisecond. Their results, detailed in a new study published in Science Robotics, are now available to see for yourself.

Meet Emo, the robot head capable of anticipating and mirroring human facial expressions, including smiles, within 840 milliseconds. Whether or not you’ll be left smiling at the end of the demonstration video remains to be seen.

AI is getting pretty good at mimicking human conversations, with heavy emphasis on “mimicking.” But when it comes to visibly approximating emotions, its physical robot counterparts still have a lot of catching up to do. A machine misjudging when to smile isn’t just awkward; it draws attention to its artificiality.

Human brains, by comparison, are incredibly adept at interpreting huge numbers of visual cues in real time, and then responding accordingly with various facial movements. Apart from making it extremely difficult to teach AI-powered robots the nuances of expression, it’s also hard to build a mechanical face capable of realistic muscle movements that don’t veer into the uncanny.

Emo’s creators attempt to solve some of these issues, or at the very least, help narrow the gap between human and robot expressivity. To build their new bot, a team led by AI and robotics expert Hod Lipson first designed a realistic robotic human head that includes 26 separate actuators to enable tiny facial expression features. Each of Emo’s pupils also contains a high-resolution camera to follow the eyes of its human conversation partner, another important nonverbal visual cue for people. Finally, Lipson’s team layered a silicone “skin” over Emo’s mechanical parts to make it all a little less, you know, creepy.

From there, researchers built two separate AI models to work in tandem: one to predict human expressions from the tiny changes in a target face, and another to quickly issue motor responses for the robot face. Using sample videos of human facial expressions, Emo’s AI then learned emotional intricacies frame by frame. Within just a few hours, Emo was capable of observing, interpreting, and responding to the little facial shifts people tend to make as they begin to smile. What’s more, it can now do so within about 840 milliseconds.

“I think predicting human facial expressions accurately is a revolution in [human-robot interactions],” Yuhang Hu, a Columbia Engineering PhD student and study lead author, said earlier today. “Traditionally, robots have not been designed to consider humans’ expressions during interactions. Now, the robot can integrate human facial expressions as feedback.”

Right now, Emo doesn’t have any verbal interpretation skills, so it can only interact by analyzing human facial expressions. Lipson, Hu, and the rest of their collaborators hope to soon combine the physical abilities with a large language model system such as ChatGPT. If they can accomplish this, then Emo will be even closer to natural(ish) human interactions. Of course, there’s a lot more to relatability than smiles, smirks, and grins, which the scientists appear to be focusing on. (“The mimicking of expressions such as pouting or frowning should be approached with caution because these could potentially be misconstrued as mockery or convey unintended sentiments.”) At some point, though, the future robot overlords may need to know what to do with our grimaces and frowns.