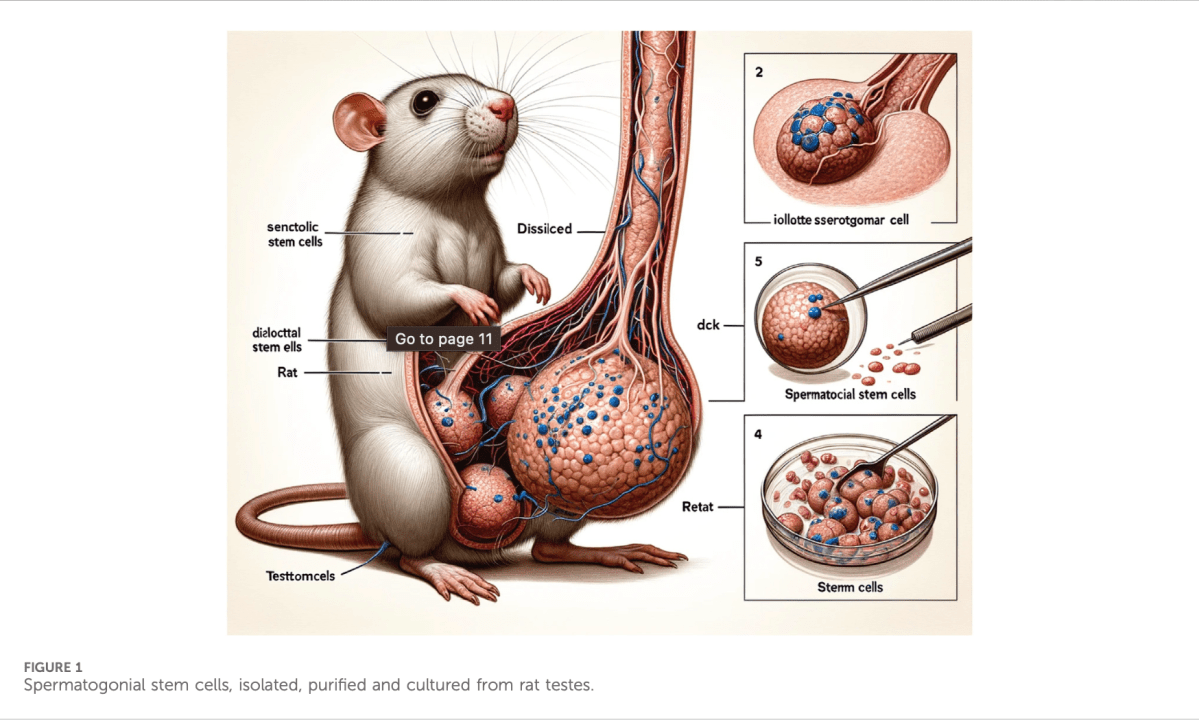

An open access scientific journal, Frontiers in Cell and Developmental Biology, was openly criticized and mocked by researchers on social media today after they noticed the publication had recently posted an article containing images with gibberish descriptions and diagrams of anatomically incorrect mammalian testicles and sperm cells, which bore signs of being created by an AI image generator.

The publication has since responded to one of its critics on the social network X, posting from its verified account: “We thank the readers for their scrutiny of our articles: when we get it wrong, the crowdsourcing dynamic of open science means that community feedback helps us to quickly correct the record.” It has also removed the article, entitled “Cellular functions of spermatogonial stem cells in relation to JAK/STAT signaling pathway,” from its website and issued a retraction notice, stating:

“Following publication, concerns were raised regarding the nature of its AI-generated figures. The article does not meet the standards of editorial and scientific rigor for Frontiers in Cell and Development Biology; therefore, the article has been retracted.

This retraction was approved by the Chief Executive Editor of Frontiers. Frontiers would like to thank the concerned readers who contacted us regarding the published article.”

Misspelled words and anatomically inaccurate illustrations

VentureBeat has obtained a copy and republished the original article below in the interest of preserving the public record of it.

As you can see, it contains several graphics and illustrations rendered in a seemingly clean and colorful scientific style. Zooming in, however, reveals many misspelled words and misshapen letters, such as “protemns” instead of “proteins,” and a word spelled “zxpens.”

Perhaps most problematic is the image of a “rat” (spelled correctly), which appears first in the paper and shows a huge growth in its groin region.

Blasted on X

Soon after the paper’s publication on February 13, 2024, researchers took to X to call it out and question how it made it through peer review.

It’s finally happened. A peer-reviewed journal article with what appear to be nonsensical AI generated images. This is dangerous. pic.twitter.com/Ez54H6l7iZ

– Kareem Carr | Statistician (@kareem_carr) February 15, 2024

The paper is authored by Xinyu Guo and Dingjun Hao of the Department of Spine Surgery, Hong Hui Hospital at Xi’an Jiaotong University, as well as Liang Dong of the Department of Spine Surgery, Xi’an Honghui Hospital in Xi’an, China.

It was reviewed by Binsila B. Krishnan of the National Institute of Animal Nutrition and Physiology (ICAR) in India and Jingbo Dai of Northwestern Medicine in the United States, and edited by Arumugam Kumaresan at the National Dairy Research Institute (ICAR) in India.

VentureBeat reached out to all the authors and editors of the paper, as well as Amanda Gay Fisher, the journal’s Field Chief Editor and a professor of biochemistry at the prestigious Oxford University in the UK, to ask further questions about how the article was published, and will update when we hear back.

Troubling wider implications for AI’s impact on science, research, and medicine

AI has been touted as a valuable tool for advancing scientific research and discovery by some of its makers, including Google with its AlphaFold protein structure predictor and its materials science AI GNoME, which was recently covered favorably by the press (including VentureBeat) for discovering 2 million new materials.

Those tools are focused on the research side. When it comes to publishing that research, however, AI image generators could clearly pose a major threat to scientific accuracy. This is especially true if researchers use them indiscriminately, whether to cut corners and publish faster, or because they are malicious or simply don’t care.

The move to use AI to create scientific illustrations or diagrams is troubling because it undermines trust. The scientific community and the wider public rely on the assumption that work in critical fields affecting our lives and health, such as medicine and biology, is accurate, safe, and vetted.

It may also be a product of the wider “publish or perish” climate that has arisen in science over the last several years. In that climate, researchers have attested that they feel the need to rush out papers of little value. Doing so shows they are contributing something, anything, to their field, and bolsters the number of citations others attribute to them, padding their resumes for future jobs.

Also, let’s be honest: some of the researchers on this paper work in spine surgery at a human hospital. Would you trust them to operate on your spine or help with your back health?

The journal Frontiers in Cell and Developmental Biology has more than 114,000 citations to its name, and this lapse now calls the integrity of all of them into question. How many more papers it has published contain AI-illustrated diagrams that slipped through the review process?

Interestingly, Frontiers in Cell and Developmental Biology is part of the wider Frontiers company of more than 230 different scientific publications. Neuroscientists Kamila Markram and Henry Markram founded the company in 2007, and Kamila Markram is still listed as CEO.

The company states its “vision [is] to make science open, peer-review rigorous, transparent, and efficient and harness the power of technology to truly serve researchers’ needs.” In fact, some of the technology it uses is AI for peer review.

As Frontiers proclaimed in a 2020 press release:

In an industry first, Artificial Intelligence (AI) is being deployed to help review research papers and assist in the peer-review process. The state-of-the-art Artificial Intelligence Review Assistant (AIRA), developed by open-access publisher Frontiers, helps editors, reviewers and authors assess the quality of manuscripts. AIRA reads each paper and can currently make up to 20 recommendations in just seconds, including the assessment of language quality, the integrity of the figures, the detection of plagiarism, as well as identifying potential conflicts of interest.

The company’s website notes that AIRA debuted in 2018 as “the next generation of peer review in which AI and machine learning enable more rigorous quality control and efficiency in the peer review.”

And just last summer, an article and video featuring Mirjam Eckert, chief publishing officer at Frontiers, stated:

At Frontiers, we use AI to help build that trust. Our Artificial Intelligence Review Assistant (AIRA) verifies that scientific knowledge is accurately and honestly presented even before our people decide whether to review, endorse, or publish the research paper that contains it.

AIRA reads every research manuscript we receive and makes up to 20 checks a second. These checks cover, among other things, language quality, the integrity of figures and images, plagiarism, and conflicts of interest. The results give editors and reviewers another perspective as they decide whether to put a research paper through our rigorous and transparent peer review.

Frontiers has also received favorable coverage of its AI article review assistant AIRA in such major publications as The New York Times and Financial Times.

Clearly, the tool failed to catch these nonsensical images in the article, leading to its retraction (if it was used at all in this case). The episode also raises questions about whether such AI tools can detect, flag, and ultimately stop the publication of inaccurate scientific information. It raises further questions about their growing use at Frontiers and elsewhere across the publishing ecosystem. Perhaps that is the danger of being on the “frontier” of a new technology movement such as AI: the risk of it going wrong is higher than with the “tried and true,” human-only or analog approach.

VentureBeat also uses AI tools for image generation and some text, but human journalists review all articles before publication. AI was not used by VentureBeat in the writing, reporting, illustrating, or publishing of this article.
