The upgraded AI model can now do some seriously impressive things with long videos or text.

Google DeepMind today launched the next generation of its powerful artificial-intelligence model Gemini, which has an enhanced ability to work with large amounts of video, text, and images.

It’s an advancement from the three versions of Gemini 1.0 that Google announced back in December, ranging in size and complexity from Nano to Pro to Ultra. (It rolled out Gemini 1.0 Pro and 1.0 Ultra across many of its products recently.) Google is now releasing a preview of Gemini 1.5 Pro to select developers and business customers. The company says that the mid-tier Gemini 1.5 Pro matches its previous top-tier model, Gemini 1.0 Ultra, in performance, but uses less computing power (yes, the names are confusing!).

Crucially, the 1.5 Pro model can handle much larger amounts of data from users, including larger prompts. While every AI model has a ceiling on how much data it can digest, the standard version of the new Gemini 1.5 Pro can handle inputs as large as 128,000 tokens, which are the words or parts of words that an AI model breaks inputs into. That’s on a par with the best version of GPT-4 (GPT-4 Turbo).

A limited group of developers will be able to submit up to 1 million tokens to Gemini 1.5 Pro, which equates to roughly 1 hour of video, 11 hours of audio, or 700,000 words of text. That’s a significant jump that makes it possible to do things that no other models are currently capable of.
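To put those figures in proportion, here is a rough back-of-the-envelope sketch in Python. It uses only the numbers quoted above (1 million tokens for roughly 700,000 words, 1 hour of video, or 11 hours of audio) and assumes the ratios scale linearly, which Google has not stated. The function and constant names are illustrative, not part of any Gemini API.

```python
# Back-of-the-envelope ratios derived only from the figures quoted in the article.
# Assumption: the conversions scale linearly with token count.
MILLION_TOKENS = 1_000_000
WORDS_PER_MILLION_TOKENS = 700_000   # "700,000 words of text"
VIDEO_HOURS_PER_MILLION = 1          # "approximately 1 hour of video"
AUDIO_HOURS_PER_MILLION = 11         # "11 hours of audio"


def approx_words(tokens: int) -> float:
    """Estimate how many words fit in a given token budget."""
    return tokens * WORDS_PER_MILLION_TOKENS / MILLION_TOKENS


def tokens_per_second(hours_per_million: float) -> float:
    """Estimate tokens consumed per second of media."""
    return MILLION_TOKENS / (hours_per_million * 3600)


standard_words = approx_words(128_000)            # standard 128,000-token window
video_rate = tokens_per_second(VIDEO_HOURS_PER_MILLION)
audio_rate = tokens_per_second(AUDIO_HOURS_PER_MILLION)

print(f"128,000 tokens is roughly {standard_words:,.0f} words")
print(f"video: about {video_rate:.0f} tokens per second")
print(f"audio: about {audio_rate:.0f} tokens per second")
```

On these assumptions, the standard window holds somewhat under 90,000 words, and video consumes tokens roughly eleven times faster than audio.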

In one demonstration video shown by Google, using the million-token version, researchers fed the model a 402-page transcript of the Apollo moon landing mission. They then showed Gemini a hand-drawn sketch of a boot and asked it to identify the moment in the transcript that the drawing represents.

“This is the moment Neil Armstrong landed on the moon,” the chatbot responded correctly. “He said, ‘One small step for man, one giant leap for mankind.’”

The model was also able to identify moments of humor. When asked by the researchers to find a funny moment in the Apollo transcript, it picked out when astronaut Mike Collins referred to Armstrong as “the Czar.” (Probably not the best line, but you get the point.)

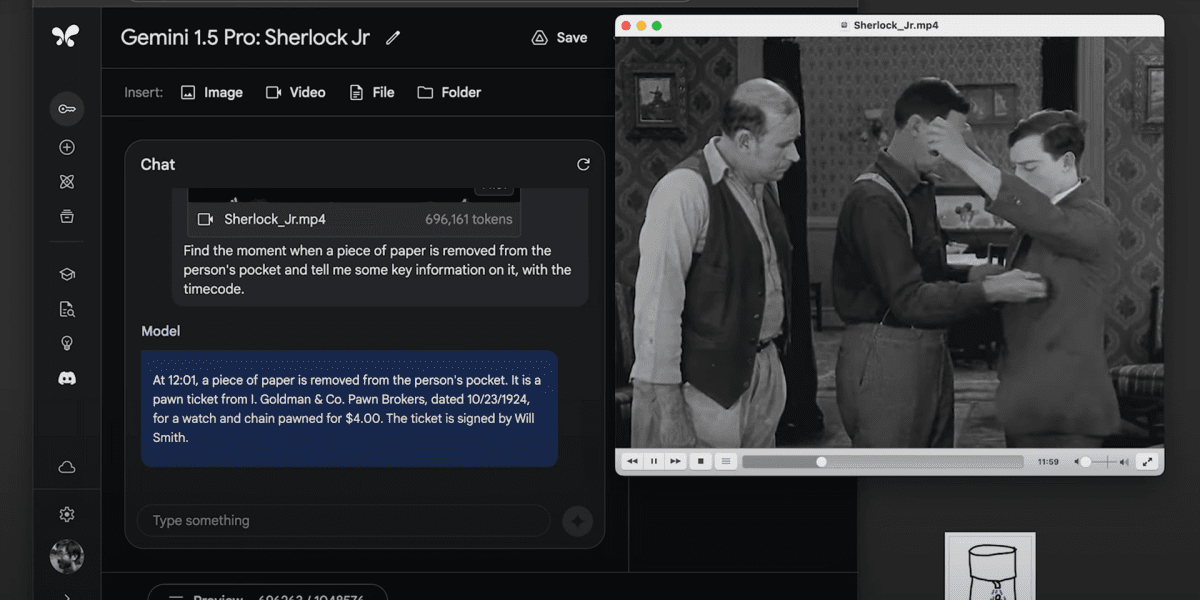

In another demonstration, the team uploaded a 44-minute silent film featuring Buster Keaton and asked the AI to identify what information was on a piece of paper that, at some point in the movie, is removed from a character’s pocket. In less than a minute, the model found the scene and correctly recalled the text written on the paper. Researchers also repeated a similar task from the Apollo experiment, asking the model to find a scene in the film based on a drawing, which it completed.

Google says it put Gemini 1.5 Pro through the usual battery of tests it uses when developing large language models, including evaluations that combine text, code, images, audio, and video. It found that 1.5 Pro outperformed 1.0 Pro on 87% of the benchmarks and more or less matched 1.0 Ultra across all of them while using less computing power.

The ability to handle larger inputs, Google says, is a result of progress in what’s called mixture-of-experts architecture. An AI using this design divides its neural network into chunks, activating only the parts that are relevant to the task at hand, rather than firing up the whole network at once. (Google is not alone in using this architecture; French AI firm Mistral released a model using it, and GPT-4 is rumored to employ the tech as well.)

“In one way it operates much like our brain does, where not the whole brain activates all the time,” says Oriol Vinyals, a deep learning team lead at DeepMind. This compartmentalizing saves the AI computing power and can generate responses faster.
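The idea of activating only part of a network can be illustrated with a toy sketch. The Python below is not Gemini’s implementation, whose details Google has not disclosed here; it assumes a common formulation in which a small “gate” scores every expert and only the top-scoring few are run. The class name `ToyMoELayer`, the sizes, and the random weights are all hypothetical.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class ToyMoELayer:
    """Toy mixture-of-experts layer: a gate picks top_k experts per input."""

    def __init__(self, num_experts, dim, top_k=2, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # One gate weight vector per expert (hypothetical random values).
        self.gate = [[rng.uniform(-1, 1) for _ in range(dim)]
                     for _ in range(num_experts)]
        # Each "expert" is just an element-wise scaling vector in this sketch.
        self.experts = [[rng.uniform(-1, 1) for _ in range(dim)]
                        for _ in range(num_experts)]
        self.calls = [0] * num_experts  # how often each expert actually ran

    def forward(self, x):
        # Score every expert cheaply, then keep only the top_k of them.
        scores = [sum(w * xi for w, xi in zip(row, x)) for row in self.gate]
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        chosen = ranked[:self.top_k]
        weights = softmax([scores[i] for i in chosen])

        out = [0.0] * len(x)
        for weight, idx in zip(weights, chosen):
            self.calls[idx] += 1  # only the chosen experts do any work
            expert_out = [a * xi for a, xi in zip(self.experts[idx], x)]
            out = [o + weight * e for o, e in zip(out, expert_out)]
        return out, chosen


layer = ToyMoELayer(num_experts=8, dim=4, top_k=2)
output, active = layer.forward([0.5, -1.0, 2.0, 0.1])
print(f"ran {len(active)} of {len(layer.experts)} experts: {active}")
```

In this sketch, six of the eight experts are never evaluated for the input, which is where the savings in computing power would come from.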

“That kind of fluidity going back and forth across different modalities, and using that to search and understand, is very impressive,” says Oren Etzioni, former technical director of the Allen Institute for Artificial Intelligence, who was not involved in the work. “This is stuff I have not seen before.”

An AI that can operate across modalities would more closely resemble the way that human beings behave. “People are naturally multimodal,” Etzioni says, because we can effortlessly switch between speaking, writing, and drawing images or charts to convey ideas.

Etzioni cautioned against reading too much into the developments. “There’s a famous line,” he says. “Never trust an AI demo.”

For one, it’s not clear how much the demonstration videos left out or cherry-picked from various tasks (Google indeed received criticism for its early Gemini launch for not disclosing that the video was sped up). It’s also possible the model would not be able to replicate some of the demonstrations if the input wording were slightly tweaked. AI models in general, says Etzioni, are brittle.

Today’s release of Gemini 1.5 Pro is limited to developers and enterprise customers. Google did not specify when it will be available for wider release.